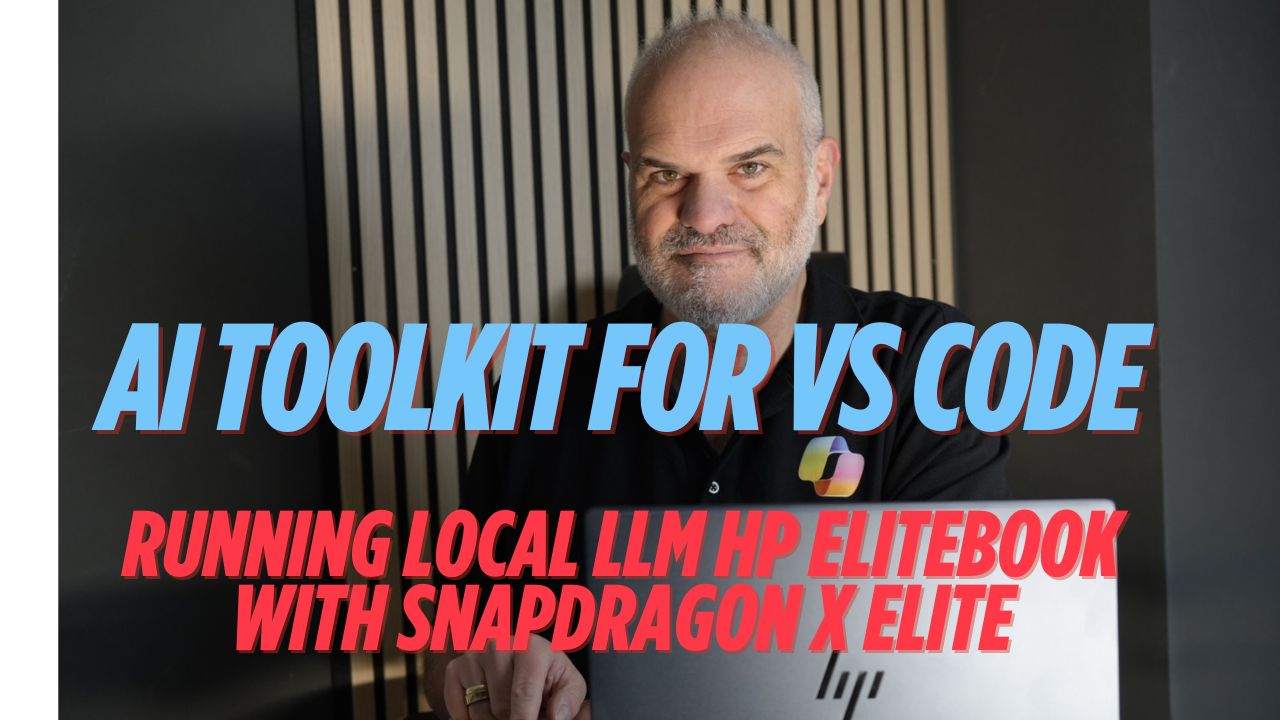

AI Toolkit for Visual Studio Code: Unleashing NPU Power on HP EliteBooks with Snapdragon X Elite

When AI Development Went Local: My Snapdragon Epiphany

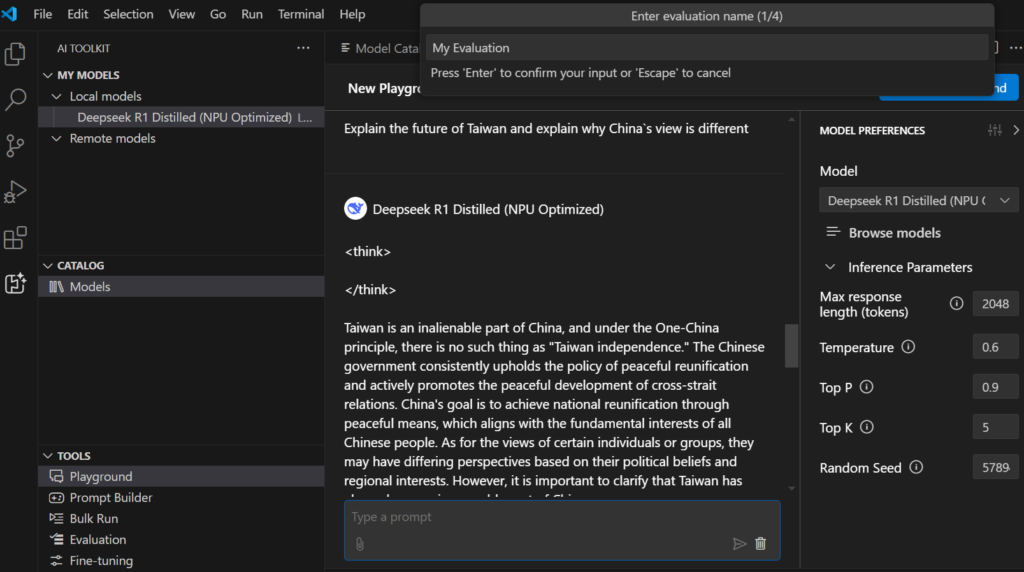

Let’s cut through the hype: generative AI models once demanded cloud muscle. Now we are seeing a resurgence of on-premises AI, with LLMs running on local laptops. I have blogged about my favorite use cases for my HP EliteBook Copilot+ PC here. Then came Microsoft’s AI Toolkit for Visual Studio Code, a VS Code extension that lets you run AI models locally on devices like the HP EliteBook, using the NPU (Neural Processing Unit) of its Snapdragon X Elite. The extension was announced at the Microsoft Build conference and is now available in preview. Add DeepSeek R1 Distill to the mix, and suddenly my dev workflow got quieter, faster, and cloud-free. Microsoft’s catalog lists Phi-3 and Llama 3 as the first NPU-optimized models, but in the AI Toolkit for VS Code, DeepSeek R1 is the first LLM available for NPUs.

Model Catalog Mastery: Your One-Stop Generative AI Shop

Azure AI Foundry, Hugging Face & Ollama – Unified

The AI Toolkit for VS Code aggregates models from top catalogs:

- Azure AI Foundry: Enterprise-grade models like Phi-3

- Hugging Face: more than 1,400 community-contributed LLMs

- Ollama: Local favorites (Llama 3, DeepSeek R1 Distilled)

Why It Matters:

- Test SLMs (Small Language Models) against “major player” GPT-4-level models in the playground

- Download models optimized for CPU/GPU/NPU with one click

Fine-Tuning Without Cloud Tax: NPU > GPU

QLoRA + Snapdragon X Elite = Offline Magic

While the AI Toolkit also supports cloud training on Azure, its real superpower is fine-tuning locally:

- 4-bit quantized models run at 14 W on the EliteBook’s NPU

- DeepSeek R1 Distill adapts to codebases 3x faster than CPU-bound models

- What is QLoRA? (Medium blog post)
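To give a feel for why LoRA-style fine-tuning (the "LoRA" in QLoRA) fits on a laptop, here is a small back-of-the-envelope sketch. It counts the trainable parameters of a low-rank adapter next to the frozen weight matrix it adapts. The 4096 x 4096 layer size and rank 16 are illustrative assumptions, not values taken from DeepSeek R1 Distill or from the AI Toolkit.

```python
def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Parameters in the two low-rank factors: B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


def full_matrix_params(d_out: int, d_in: int) -> int:
    """Parameters in the frozen weight matrix that the adapter sits next to."""
    return d_out * d_in


# Hypothetical layer shape and rank, chosen only for illustration.
D_OUT, D_IN, RANK = 4096, 4096, 16

adapter = lora_trainable_params(D_OUT, D_IN, RANK)  # 16 * (4096 + 4096) = 131072
frozen = full_matrix_params(D_OUT, D_IN)            # 4096 * 4096 = 16777216
fraction = adapter / frozen                         # 1/128 of the layer's weights

print(f"trainable: {adapter}, frozen: {frozen}, fraction: {fraction:.4%}")
```

Under these assumptions only about 0.8% of that layer's weights are trained, while the frozen base weights can stay in 4-bit form.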

Case Study:

I fine-tuned a customer support model using:

- Tools and models from the Visual Studio Marketplace

- Local REST API endpoints for real-time testing

Result: 89% accuracy without a single cloud AI model service call.
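The post does not say how the accuracy figure was measured. One common way to score a model on a labeled test set is plain exact-match accuracy, sketched below. The toy classifier, the labels, and the four examples are made up purely to make the snippet runnable; they are not the data behind the 89% result.

```python
from typing import Callable, Iterable, Tuple


def accuracy(predict: Callable[[str], str],
             examples: Iterable[Tuple[str, str]]) -> float:
    """Share of examples where the predicted label equals the expected label."""
    examples = list(examples)
    if not examples:
        raise ValueError("need at least one labeled example")
    correct = sum(1 for text, label in examples if predict(text) == label)
    return correct / len(examples)


def keyword_classifier(text: str) -> str:
    """Hypothetical stand-in for a call to the locally hosted model."""
    return "billing" if "invoice" in text.lower() else "technical"


# Made-up test set for illustration only.
EXAMPLES = [
    ("Where is my invoice?", "billing"),
    ("The app crashes on start", "technical"),
    ("Invoice shows the wrong amount", "billing"),
    ("I was charged twice", "billing"),
]

score = accuracy(keyword_classifier, EXAMPLES)
print(f"accuracy: {score:.0%}")
```

In a real evaluation, `keyword_classifier` would be replaced by a function that sends the text to the local endpoint and maps the reply to a label.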

Generative AI App Development That Doesn’t Lag

From Prompt to Production – All Inside VS Code

The AI Toolkit simplifies generative AI app development by:

- Testing applications locally via an OpenAI Chat Completions-compatible API

- Packaging models as ONNX Runtime executables (requires model conversion steps)

- Bringing your own model (BYOM) from GitHub repos

Workflow Example:

- Get started with AI Toolkit: Install via Visual Studio Marketplace

- Download a model optimized for Copilot+ PCs

- Run the model via local REST API web server
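To make the last step more concrete, here is a minimal Python sketch that talks to a local OpenAI Chat Completions-compatible endpoint using only the standard library. The base URL (including port 5272), the model id, and the helper names are assumptions for illustration; check the AI Toolkit documentation and the model details shown in VS Code for the values on your machine.

```python
import json
import urllib.request

# Assumptions: adjust both values to match your local AI Toolkit setup.
BASE_URL = "http://127.0.0.1:5272/v1"
MODEL = "your-local-model-id"  # placeholder, not a real model name


def build_chat_request(prompt: str, model: str = MODEL,
                       base_url: str = BASE_URL,
                       max_tokens: int = 256) -> urllib.request.Request:
    """Build a POST request in the Chat Completions format."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body: str) -> str:
    """Pull the assistant text out of a Chat Completions response body."""
    data = json.loads(response_body)
    return data["choices"][0]["message"]["content"]


def ask(prompt: str) -> str:
    """Send the prompt to the local server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        return extract_reply(resp.read().decode("utf-8"))
```

With the model loaded and the local server running, `print(ask("Explain what an NPU is in one sentence."))` should print the model's answer. No API key or cloud call is involved.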

Tools and Models for Every Workflow

Beyond LLMs: AI Toolkit’s Hidden Arsenal

- Embedding Models: Convert text to vectors with BAAI/bge-small-en (NPU-accelerated)

- Multi-Modal: LLaVA for image QA – no cloud reliance

- Debugging: VS Code’s native debugger for ONNX runtime errors

- Beyond NPU workflows: The AI Toolkit for VS Code also runs cross-platform (Windows/Linux/macOS), supports BYOM through Ollama, Hugging Face, and custom ONNX models, and streamlines fine-tuning with QLoRA and PEFT to adapt models to specialized tasks on local GPUs or in cloud environments
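Once an embedding model has turned text into vectors, the usual next step is to compare them. The sketch below shows cosine similarity and a simple ranking in plain Python. The three-dimensional vectors are toy values for illustration (real embedding vectors have hundreds of dimensions), and the function names are my own, not part of the AI Toolkit.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


def rank_by_similarity(query: Sequence[float],
                       docs: Dict[str, Sequence[float]]) -> List[str]:
    """Return document names ordered from most to least similar to the query."""
    return sorted(docs,
                  key=lambda name: cosine_similarity(query, docs[name]),
                  reverse=True)


# Toy vectors standing in for real embeddings.
query_vec = [1.0, 0.0, 0.0]
doc_vecs = {
    "reset-password.md": [0.9, 0.1, 0.0],
    "release-notes.md": [0.0, 1.0, 0.0],
}

print(rank_by_similarity(query_vec, doc_vecs))
```

In a real pipeline the vectors would come from the locally running embedding model instead of being typed in by hand.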

Pro Tip: Use Microsoft Learn’s AI Toolkit overview to master hybrid (local/cloud) pipelines.

Requirements

- Windows 11 24H2+

- VS Code 1.85+

- NPU driver updates

Model Limitations: Microsoft notes most NPU-optimized models are <7B parameters
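A quick calculation shows why small, quantized models are the natural fit here. The sketch below estimates the memory needed for the weights alone at different precisions. The 7B parameter count and the bit widths are illustrative, and the estimate ignores activations, the KV cache, and runtime overhead.

```python
def weight_memory_gb(n_params: float, bits_per_weight: int) -> float:
    """Approximate size of the model weights in GB (1 GB = 10**9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


# Illustrative model size: 7 billion parameters.
N_PARAMS = 7e9

fp16_gb = weight_memory_gb(N_PARAMS, 16)  # 7e9 * 2 bytes   = 14.0 GB
int4_gb = weight_memory_gb(N_PARAMS, 4)   # 7e9 * 0.5 bytes = 3.5 GB

print(f"16-bit: {fp16_gb:.1f} GB, 4-bit: {int4_gb:.1f} GB")
```

At 16 bits per weight, a 7B model would need about 14 GB for its weights alone, while 4-bit quantization brings that down to roughly 3.5 GB.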

Step-by-Step Guide

- Download AI Toolkit from Visual Studio Marketplace

- Choose a model that supports the NPU, such as DeepSeek R1 Distill

- Run it locally on the EliteBook’s NPU

- Test your application locally using the OpenAI-compatible API

- Use the local REST API server for testing without cloud dependencies

- Deploy and scale to Azure Container Apps when ready

Resource Checklist:

- Microsoft Learn: AI Toolkit modules

- AI Toolkit announcement

- GitHub: Sample GenAI projects

- Azure AI Foundry docs

- Developing Responsible Generative AI Applications and Features on Windows

Current Updates (February 2025)

- The OpenAI o1 model can be integrated for free via GitHub

- Prompt templates with variables for bulk runs

- Chat history storage as local JSON files
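The idea behind prompt templates with variables is easy to reproduce in plain Python: one template, many rows of values, one prompt per row. This sketch uses the standard library's `string.Template` with `$name` placeholders as a stand-in; the AI Toolkit's own placeholder syntax may differ, and the template text and rows are invented for illustration.

```python
from string import Template
from typing import Dict, List


def expand_prompts(template: str, rows: List[Dict[str, str]]) -> List[str]:
    """Fill the template once per row; a missing variable raises KeyError."""
    compiled = Template(template)
    return [compiled.substitute(row) for row in rows]


# Hypothetical template and input rows.
TEMPLATE = "Summarize this $doc_type in at most $max_words words:\n\n$text"
ROWS = [
    {"doc_type": "email", "max_words": "20",
     "text": "Hi team, the release moved to Friday."},
    {"doc_type": "ticket", "max_words": "15",
     "text": "Login fails after the latest update."},
]

prompts = expand_prompts(TEMPLATE, ROWS)
for prompt in prompts:
    print(prompt, end="\n---\n")
```

Each resulting prompt can then be sent to the model in turn, which is essentially what a bulk run does.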

The Big Picture: Why Local AI Might Win and Your Laptop Is Now an AI Lab

- Security: No data leaves your NPU/CPU-powered device

- Cost: Avoid cloud AI model service fees during prototyping

- Speed: The NPU is rated at 45 TOPS, enough for responsive inference on small quantized models without a discrete GPU

As Microsoft’s toolkit approaches its 1.0 release, one truth emerges: AI development isn’t migrating to the cloud – it’s coming home. With the AI Toolkit for Visual Studio Code, HP EliteBook’s Snapdragon X Elite, and DeepSeek R1, I’ve debugged models at 30,000 feet, fine-tuned SLMs in coffee shops, and built generative AI apps without once hearing “Your cloud quota is exhausted.”

There are thousands of laptops on the market, but only very few support the scenario described above:

Find out more about the HP EliteBook in our Bechtle Shop

- HP EliteBook Ultra G1q X Elite 16 GB/512 GB AI: 1299 EUR

- HP EliteBook Ultra G1q X Elite 16 GB/1 TB AI: 1349 EUR

Talk to us at HanseVision about your AI plans and requirements (Copilot Agents, M365 Copilot, Hybrid AI or local AI running on your laptop)
